Still speed up your robot video demos for presentation?

Try DemoSpeedup to accelerate your policy execution!

Abstract

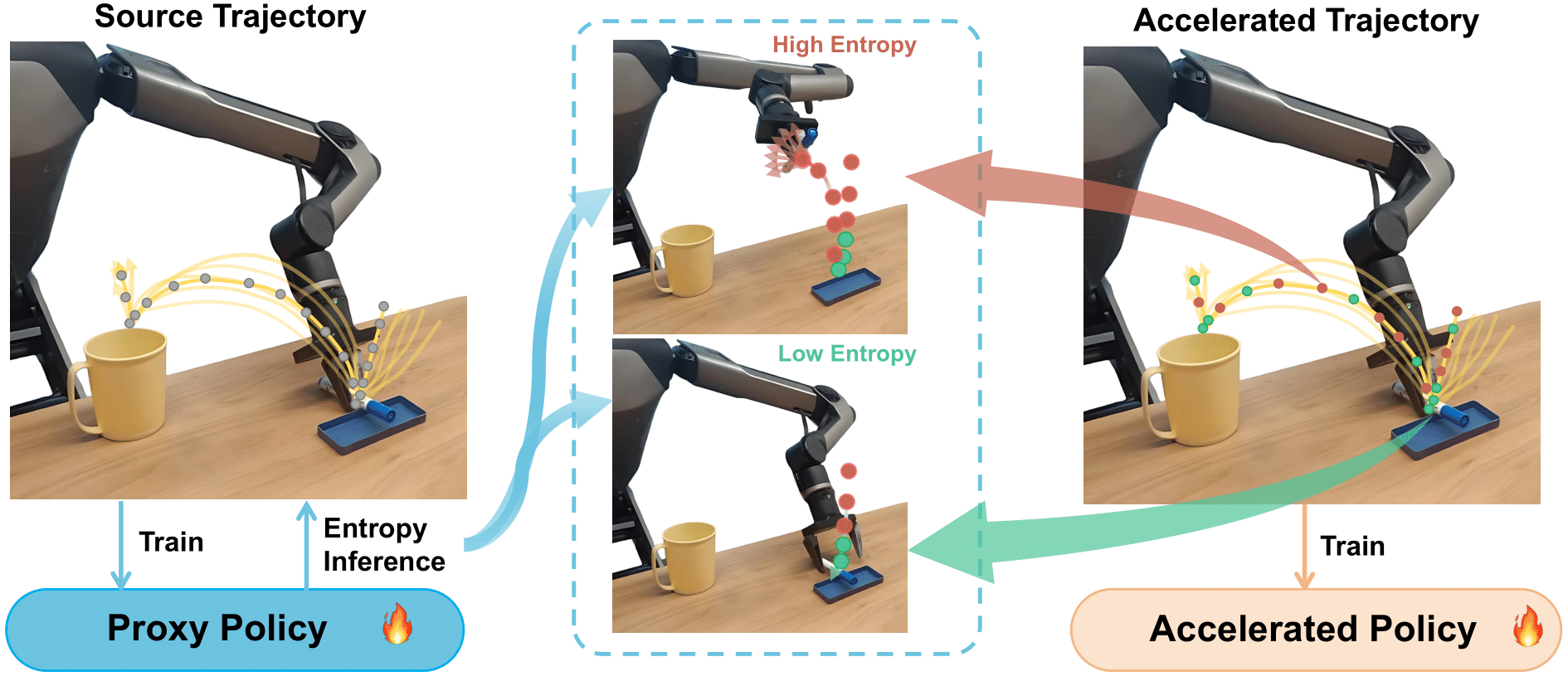

Imitation learning has shown great promise in robotic manipulation, but policy execution is often unsatisfactorily slow because the demonstrations collected by human operators are commonly slow-paced. In this work, we present DemoSpeedup, a self-supervised method that accelerates visuomotor policy execution via entropy-guided demonstration acceleration. DemoSpeedup starts by training an arbitrary generative policy (e.g., ACT or Diffusion Policy) on normal-speed demonstrations, which then serves as a per-frame action entropy estimator. The key insight is that frames with lower action entropy estimates call for more consistent policy behavior, which often indicates a need for higher-precision operations. In contrast, frames with higher entropy estimates correspond to more casual sections and can therefore be accelerated more safely. Thus, we segment the original demonstrations according to the estimated entropy and accelerate them by down-sampling at rates that increase with the entropy values. Trained with the sped-up demonstrations, the resulting policies execute up to 3 times faster while maintaining task completion performance. Interestingly, these policies can even achieve higher success rates than those trained with normal-speed demonstrations, owing to their shorter decision-making horizons.
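
As a rough illustration of the down-sampling step, the sketch below assumes per-frame entropy estimates are already available. It buckets them with hypothetical ascending `thresholds`, groups contiguous frames that share a bucket, and keeps every k-th frame of each run, where k comes from a hypothetical `rates` list that grows with the entropy level. The function names, thresholds, and rates are illustrative assumptions, not DemoSpeedup's actual code or settings.

```python
from itertools import groupby
from typing import List, Sequence, TypeVar

T = TypeVar("T")


def entropy_level(h: float, thresholds: Sequence[float]) -> int:
    """Number of (ascending) thresholds that the entropy value exceeds."""
    return sum(h > t for t in thresholds)


def speedup_demo(
    frames: Sequence[T],
    entropies: Sequence[float],
    thresholds: Sequence[float],
    rates: Sequence[int],
) -> List[T]:
    """Down-sample a demo run by run, compressing high-entropy runs more.

    Frames are grouped into contiguous runs that share an entropy level;
    a run at level k keeps every rates[k]-th frame. The (hypothetical)
    rates should be non-decreasing so casual sections are sped up most.
    """
    if len(frames) != len(entropies):
        raise ValueError("need one entropy value per frame")
    if len(rates) != len(thresholds) + 1:
        raise ValueError("need one rate per entropy level")
    levels = [entropy_level(h, thresholds) for h in entropies]
    out: List[T] = []
    start = 0
    for level, run in groupby(levels):
        length = sum(1 for _ in run)
        out.extend(frames[start : start + length][:: rates[level]])
        start += length
    return out


if __name__ == "__main__":
    # 4 low-entropy (precise) frames, then 8 high-entropy (casual) frames.
    demo = list(range(12))
    ent = [0.1] * 4 + [0.9] * 8
    print(speedup_demo(demo, ent, thresholds=[0.5], rates=[1, 4]))
    # -> [0, 1, 2, 3, 4, 8]
```

Keeping a subset of frames like this assumes absolute actions (e.g., target joint positions); with delta actions, the skipped deltas would need to be accumulated instead of dropped.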

Method

DemoSpeedup uses a generative policy trained on the original demonstrations to estimate the conditional action entropy of each frame. Actions with high entropy (red points) are down-sampled at a higher rate, while actions with low entropy (green points) are down-sampled at a lower rate.
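
The page does not spell out the entropy estimator at this point. One simple stand-in, sketched below purely as an assumption, is to sample the trained policy several times at the same observation, fit a diagonal Gaussian to the sampled actions, and take its differential entropy, H = 0.5 * sum over dimensions d of log(2*pi*e*var_d). The `sample_action` callable is a hypothetical hook into a stochastic policy such as Diffusion Policy; it is not an API of ACT or Diffusion Policy, and the Gaussian fit is a swapped-in approximation rather than DemoSpeedup's estimator.

```python
import math
import random
import statistics
from typing import Callable, List, Sequence, TypeVar

Action = Sequence[float]
O = TypeVar("O")  # observation type; left generic for this sketch


def gaussian_entropy(samples: Sequence[Action], eps: float = 1e-8) -> float:
    """Differential entropy of a diagonal Gaussian fitted to action samples.

    H = 0.5 * sum_d log(2*pi*e*var_d); eps keeps log() finite when a
    dimension shows (almost) no spread across samples.
    """
    if len(samples) < 2:
        raise ValueError("need at least two samples")
    h = 0.0
    for d in range(len(samples[0])):
        var = statistics.pvariance([s[d] for s in samples])
        h += 0.5 * math.log(2 * math.pi * math.e * (var + eps))
    return h


def per_frame_entropy(
    observations: Sequence[O],
    sample_action: Callable[[O], Action],
    n_samples: int = 32,
) -> List[float]:
    """Query a stochastic policy n_samples times per frame and score each frame.

    sample_action is a hypothetical hook that draws one action (or a
    flattened action chunk) from the trained generative policy.
    """
    return [
        gaussian_entropy([sample_action(obs) for _ in range(n_samples)])
        for obs in observations
    ]


if __name__ == "__main__":
    rng = random.Random(0)

    # Toy "policy": the observation is simply the noise scale of its actions.
    def toy_policy(scale: float) -> Action:
        return [rng.gauss(0.0, scale), rng.gauss(0.0, scale)]

    # The low-noise frame should receive a much lower entropy estimate.
    print(per_frame_entropy([0.01, 1.0], toy_policy))
```

A diagonal Gaussian ignores multimodality (e.g., two equally valid grasp approaches), so a multimodal policy output would need a richer density estimate.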

Entropy Visualization

Estimated entropy and segmented precision level of collected demos, recorded from the robot's head camera (background masked for clarity).
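
One way to turn a per-frame entropy curve into precision labels like those visualized here is sketched below, using the 0 = Precise, 1 = Casual convention from the overlays described under "Scope of application". The moving-average smoothing, the quantile threshold, and the window size are hypothetical choices for illustration; the page does not state how its segmentation is computed.

```python
import statistics
from typing import List, Sequence

PRECISE, CASUAL = 0, 1  # label convention used in the page's overlays


def smooth(values: Sequence[float], window: int) -> List[float]:
    """Centered moving average; the window shrinks at the sequence ends."""
    half = window // 2
    out = []
    for i in range(len(values)):
        lo, hi = max(0, i - half), min(len(values), i + half + 1)
        out.append(statistics.fmean(values[lo:hi]))
    return out


def segment_precision(
    entropies: Sequence[float], quantile: float = 0.5, window: int = 5
) -> List[int]:
    """Label each frame PRECISE (low entropy) or CASUAL (high entropy).

    The threshold is the given quantile of the smoothed entropy curve;
    quantile and window are illustrative, untuned assumptions.
    """
    smoothed = smooth(entropies, window)
    ranked = sorted(smoothed)
    threshold = ranked[min(len(ranked) - 1, int(quantile * len(ranked)))]
    return [CASUAL if h >= threshold else PRECISE for h in smoothed]


if __name__ == "__main__":
    # A precise phase (low entropy) followed by a casual phase.
    print(segment_precision([0.1] * 10 + [2.0] * 10))
```

Smoothing before thresholding suppresses single-frame label flicker, which would otherwise fragment the demo into many tiny segments with different down-sampling rates.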

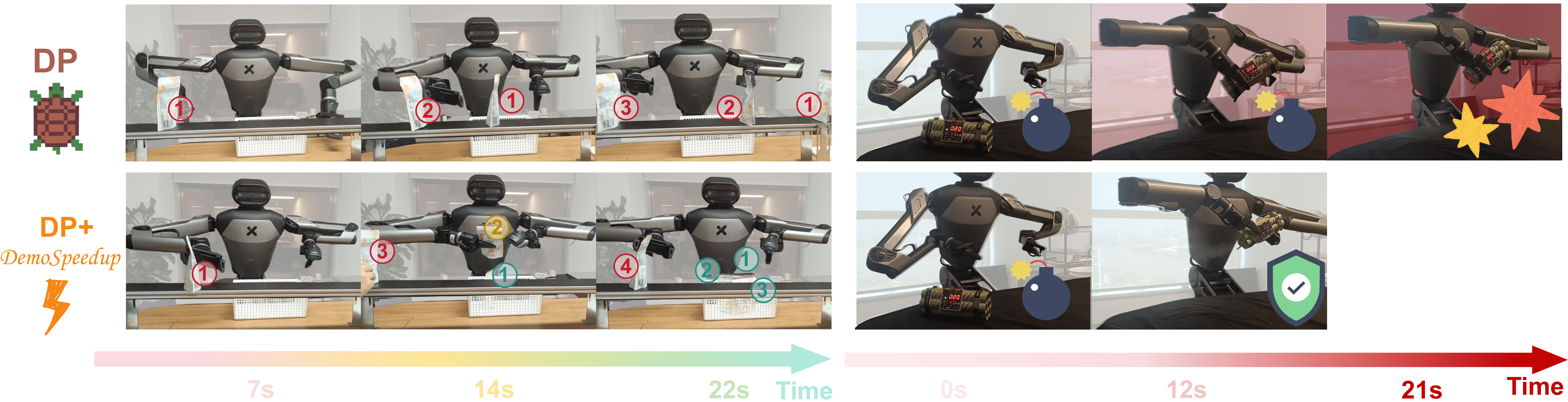

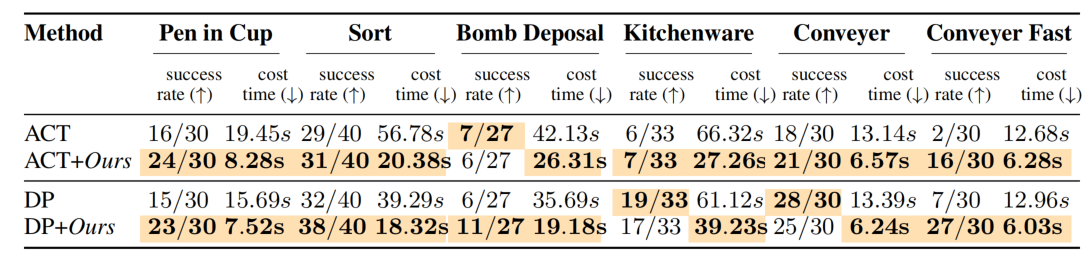

Real-world Experiments

All the videos are played in real time.

Pen in Cup

Sort

Kitchenware

Bomb disposal

Conveyer Fast

Quantitative results

The results demonstrate that DemoSpeedup efficiently accelerates visuomotor policies while maintaining their success rates.

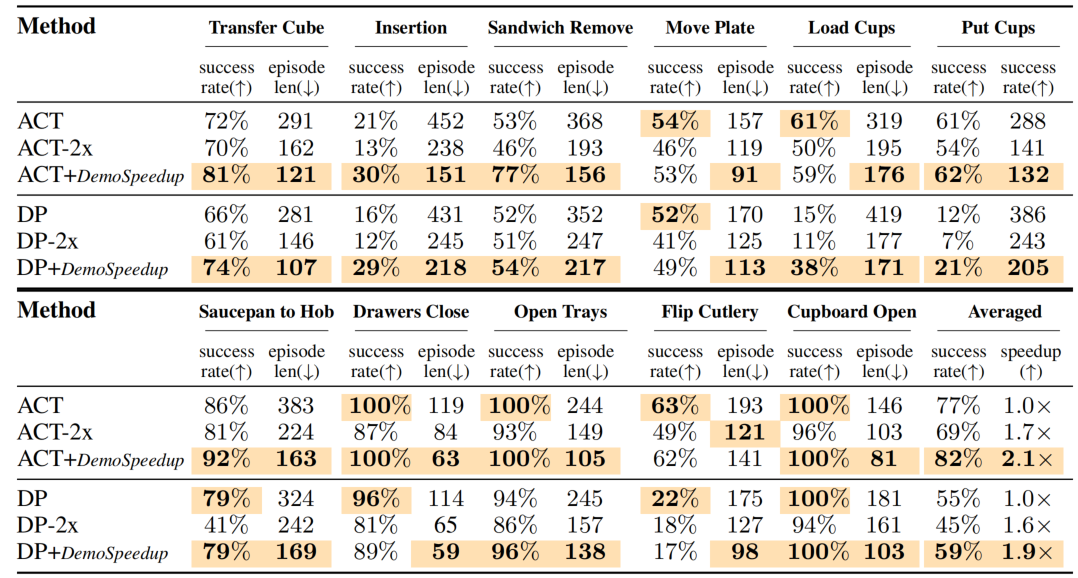

Simulation Experiments

We evaluate our approach on Aloha and Bigym, which provide high-precision controllers and human-collected datasets. Some simulation tasks and the corresponding methods are shown below.

Scope of application

DemoSpeedup is suitable for isomorphic teleoperation, such as VR and kinematics-based teaching, since the robot's action data then exhibits entropy patterns similar to those of human motion. However, it may not be suitable for non-isomorphic teleoperation data or script-generated data.

The figure above shows the entropy of a DemoGen demo teleoperated by keyboard. In the left video, the upper-left number is the entropy and the lower-left number is the segmented precision ('0' refers to Precise and '1' refers to Casual). Since the robot moves in straight lines through the air during keyboard teleoperation, its motion has lower entropy in free space and higher entropy during contact-rich phases, which differs from the human pattern.

The figure above shows the entropy of script-generated trajectories from MetaWorld, which exhibit no regular entropy patterns.